Transparency, Trust, and Proprietary Predictive Analytics

This post was originally published on Medium.

A particularly good talk at Strata NY last year was given by Brett Goldstein, former CIO of Chicago, who talked about accountability and transparency in predictive models that affect people’s lives. It struck a strong chord with me, so I wanted to take some time to write down some thoughts. (And a rather longer time to publish those thoughts…) I’m sure others have thought about this more and have better takes on it. Please comment and provide links!

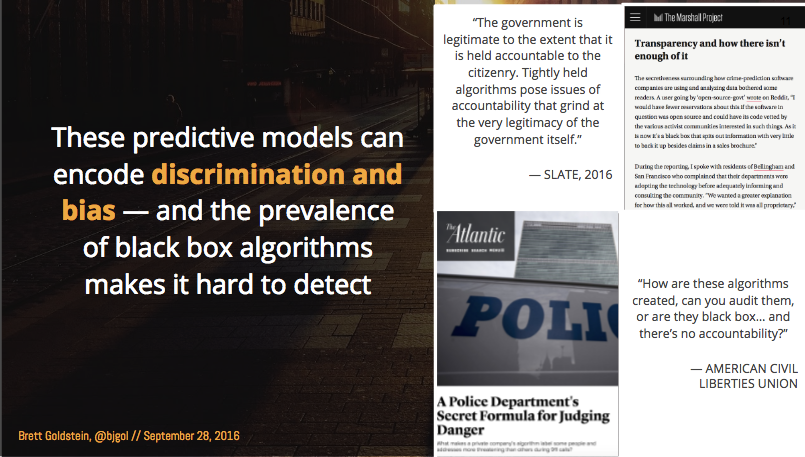

A slide from Goldstein’s Strata presentation.

There has been a lot of discussion recently about accountability in predictive models and, in particular, about the failure of certain systems to avoid troublesome racial bias. ProPublica wrote an insightful report about a criminal justice model that tries to estimate the likelihood of recidivism, to help courts with sentencing, but that appears to treat racial minorities more harshly. Cathy O’Neil has a new book out about all the ways that unaccountable algorithms can hurt rather than help society. Computer scientists are trying to find ways of measuring and correcting disparate impact in predictive algorithms. Those of us who build models that affect people’s lives need to be highly attuned to this issue, and focused on finding ways to do the right thing.

In general, transparent algorithms potentially have more eyes on them, which reduces the likelihood that an error or oversight leads to a bad outcome. Complex algorithms (and machine learning systems are often quite complex) can be fragile in ways that lead to subtle failures and poor predictions. For this reason, Goldstein asserted that customers of these algorithms (mostly governments and public institutions) will push to require that predictive models be open to inspection and validation.

These are top-down reasons for transparency in predictive algorithms: the people writing the checks want to know that the system works as it should. But there are also bottom-up reasons for transparency, namely the development of trust. In many cases, predictive models do not directly translate into a decision or policy, but instead inform a human who must take the prediction into account while making a decision. People using these predictions want to trust that the models are working well and will help them do their jobs better.

Trust can arise through several avenues. Providing the code or algorithm is not likely to help most non-technical end-users, though in some limited cases it could. More often, access to the people who implemented the system, and the ability to ask them questions constructively, can be highly reassuring, although this approach clearly doesn’t scale. Also, just as many people trust open-source software simply because it is open, transparent predictive models might be more trustworthy simply because the openness helps keep the data scientist honest.

Where does this leave data scientists and companies who want to build businesses on predictive models? It seems to me that a fully open system can only rarely be the basis of a business with recurring revenue. (A consulting business can be profitable, but does not have recurring revenue.) That is, if you’re creating a predictive model of, say, hospital readmission likelihood, you’d like to be able to sell readmission predictions for individual patients on an ongoing basis. If instead you were required to sell and share an interpretable model (say, one of MIT Professor Cynthia Rudin’s 3x5-card-sized rule lists), you would have to charge up front for the model, which is unlikely to provide enough revenue to keep your business going. Trying to sell the same customer a slightly better rule list the next year is not likely to succeed either. (Note that if the predictions are part of a larger, proprietary system, such as an otherwise-compelling workflow tool, you may have a shot.)

An example of a very interpretable, not very predictive, and not at all proprietary predictive model. (source)
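A rule list is just an ordered series of if-then rules, checked from top to bottom, where the first rule that fires determines the prediction and also serves as its explanation. The sketch below shows that shape in Python. Every feature name, threshold, and risk value in it is invented for illustration; none of it comes from a published rule list or a real readmission model. The business problem is visible in how little there is: once a customer has these few lines, they have the whole model.

```python
from typing import Callable, Dict, List, Tuple

Patient = Dict[str, float]

# Hypothetical rule list for readmission risk. All feature names, thresholds,
# and risk values are invented for illustration, not taken from a real model.
# Rules are checked in order; the first rule whose condition holds decides.
RULES: List[Tuple[str, Callable[[Patient], bool], float]] = [
    ("3 or more admissions in the past year",
     lambda p: p["prior_admissions"] >= 3, 0.40),
    ("age 75 or older and stay longer than 7 days",
     lambda p: p["age"] >= 75 and p["length_of_stay"] > 7, 0.30),
    ("no follow-up appointment scheduled",
     lambda p: p["follow_up_scheduled"] == 0, 0.20),
]
DEFAULT_RISK = 0.10  # used when no rule fires


def predict(patient: Patient) -> Tuple[float, str]:
    """Return (risk, reason); the matching rule doubles as the explanation."""
    for reason, condition, risk in RULES:
        if condition(patient):
            return risk, reason
    return DEFAULT_RISK, "no rule matched"


print(predict({"prior_admissions": 1, "age": 80,
               "length_of_stay": 9, "follow_up_scheduled": 1}))
# (0.3, 'age 75 or older and stay longer than 7 days')
```

The transparency is total: every prediction comes with the exact rule that produced it, which is great for trust and terrible for recurring revenue.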

So, there’s an inherent tension here. You want to sell predictions, not the model, to institutions that want to know what you built, so they can ensure it’s making predictions that are ethical and aligned with their goals. And you want the people using your model to make decisions to trust the model, so they can get the full value out of it. But you have to maintain some sort of competitive advantage, so your customer can’t trivially take your model in-house, and your competitors can’t just copy the model off of a web site. How do you resolve this?

To ground this, let me briefly review what several previous employers of mine do, focusing on three aspects of transparency: the data used, the way the data is transformed (feature engineered), and the core model itself.

A few years ago, I worked for Sentrana, a company that provides sales and marketing software-as-a-service to large enterprises, mostly in the food manufacturing and delivery vertical. The models’ outputs were mostly recommendations: prices, cross-sell opportunities, and the like. For Sentrana, the data was primarily the customer’s own data, supplemented by some open (i.e., government) and proprietary (3rd-party) data; there was extensive proprietary feature engineering to get the data into shape; and the models themselves were mostly highly proprietary, based on intense analysis of the underlying microeconomic processes. The results were impressive, but not at all transparent to the customers. Because the software was sold to the upper management of very hierarchical businesses, customers could rely on high-level improvements in business results rather than on model transparency, and could insist that lower-level employees use the recommendations in their day-to-day work, even without the trust that transparency provides. Because the feature engineering and models were proprietary, customers could not easily take the model in-house, which gave Sentrana a substantial competitive advantage.

Until recently, I worked at EAB, a company that (among other things) provides a workflow tool for college academic advisors that includes a predictive model of student success (graduation). The model’s output is primarily a likelihood of graduation, which can be used to identify and intervene with at-risk students who might otherwise slip through the cracks of traditional GPA thresholds. The data is mostly the member institutions’ academic data, and the company states openly to its customers (when technically sophisticated people ask) that the core model is more or less an off-the-shelf penalized logistic regression trained with standard best practices, but that the feature engineering and framework required to get good results from that off-the-shelf model are proprietary and necessarily quite intricate. Much of the feature engineering, and the specific way the core model is applied, remain trade secrets and a barrier to replication by both member institutions and competitors. A potential trust issue is that the model’s actual predictions (“this student is at high risk of not graduating”) are not transparent or explainable to end-users. The firm has expended a great deal of effort convincing advisors to trust the models and to use the results to prioritize their work with students. We on the EAB Data Science team could have tried to use maximally simple, interpretable models, but we preferred to focus on improving predictive quality and flexibility, maintaining the value of the proprietary components of the system. That’s probably the right call, but it’s not an easy one.
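To make the split between an off-the-shelf core model and proprietary feature engineering concrete, here is a minimal sketch. It is not EAB’s code, features, or data: the GPA histories, the two engineered features (latest GPA and a least-squares GPA trend), and the labels are all invented for illustration. A few lines of hand-rolled gradient descent stand in for a library implementation (such as scikit-learn’s `LogisticRegression`, which applies an L2 penalty by default) so the example has no dependencies.

```python
import math
from typing import List, Sequence, Tuple

# --- Feature engineering (hypothetical; where a vendor's real value would live) ---

def gpa_trend(term_gpas: Sequence[float]) -> float:
    """Least-squares slope of GPA across consecutive terms."""
    n = len(term_gpas)
    x_mean = (n - 1) / 2
    y_mean = sum(term_gpas) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(term_gpas))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den if den else 0.0

def engineer(term_gpas: Sequence[float]) -> List[float]:
    """Raw per-term GPAs -> model inputs: [latest GPA, GPA trend]."""
    return [term_gpas[-1], gpa_trend(term_gpas)]

# --- Core model (generic L2-penalized logistic regression) ---

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def fit_l2_logistic(X, y, lam=0.1, lr=0.1, epochs=5000) -> Tuple[List[float], float]:
    """Batch gradient descent on mean log-loss + (lam / 2) * ||w||^2.
    The intercept b is not penalized."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * (gj / n + lam * wj) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict_risk(w: List[float], b: float, term_gpas: Sequence[float]) -> float:
    """Probability of NOT graduating, from one student's raw GPA history."""
    return sigmoid(b + sum(wj * xj for wj, xj in zip(w, engineer(term_gpas))))

# Invented toy data: per-term GPAs, label 1 = did not graduate.
histories = [[3.5, 3.6, 3.7], [3.0, 3.2, 3.4], [2.8, 3.0, 3.1],
             [3.6, 3.3, 2.9], [3.2, 2.8, 2.5], [2.9, 2.6, 2.2]]
labels = [0, 0, 0, 1, 1, 1]

w, b = fit_l2_logistic([engineer(h) for h in histories], labels)
print(predict_risk(w, b, [3.1, 2.8, 2.5]), predict_risk(w, b, [2.5, 2.8, 3.1]))
```

Everything in the second half of the sketch is generic, and anyone could write it. In the setting described above, the competitive value would sit in functions like `engineer`, which in a real system would be far more intricate than this two-feature toy.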

I recently worked for a while at an HR analytics software-as-a-service firm that predicts tenure for job applicants, to reduce the cost of turnover and its impact on service quality. In that case, the data is primarily proprietary: the results of a screening survey taken by job applicants, supplemented by some of the client’s data. The feature engineering is also proprietary, so even though clients know what questions are asked, they don’t know how to make use of the answers. As with EAB’s model, the value of the system and the barrier to entry lie in the way the company uses and transforms its own data. Hiring teams use the model’s scores as an informative data point in their hire/no-hire decisions, improving outcomes, but, as of when I worked there, the scores did not come with an explanation.

It seems to me that you can create a business with recurring revenue (i.e., not a consulting engagement) as long as some aspect of the data → feature engineering → model pipeline is proprietary and hard to copy. A fully proprietary model has little hope of transparency, and will probably be buggy to boot. The ideal situation is to have proprietary data. Google is more than happy to tell you all about how they build fancy deep learning systems for photo and speech recognition, translation, and many other things. You don’t have their data set, though, so you can’t possibly replicate their results, even if you have their tools.

But in many business cases, as a predictive model vendor, you’re using the customer’s own data. If you’d prefer to use an off-the-shelf model — and you probably should — then your only option for preventing your customer from reimplementing your algorithm is to carefully and insightfully apply your “substantive expertise” to the problem, building engineered features better than anyone else can, and to preserve those insights as trade secrets.

Finally, I’d like to mention a recent technique that might give end-users partial transparency, and help them develop trust, even while proprietary aspects of the algorithm remain secret. LIME (Local Interpretable Model-Agnostic Explanations) was developed by Marco Tulio Ribeiro at the University of Washington, along with several colleagues. Briefly, for a single prediction, it perturbs the input, observes how the model’s output changes, and fits a simple, interpretable model to those local responses, identifying the few aspects of the input that most strongly drive that particular output. Applied to a student graduation model, for instance, it might identify a GPA trend and a choice of major as the most relevant pieces of information for a specific student. This contrasts with traditional variable importance measures, which identify the most relevant inputs across all cases and may not provide much insight into any particular one. By shedding light on individual predictions, LIME may help end-users build trust (or find reasons to ignore a particular outlying prediction, which is also valuable). Importantly, using LIME doesn’t allow the model to be easily reverse-engineered, and it should be possible to provide explanations at the level of the raw features rather than the engineered ones. Staying with the student graduation model, if the particular way that GPA trends are calculated and used is proprietary, LIME may be able to say that a student is at high risk because of the 3.1, 2.8, and 2.5 GPAs of the last three terms, without exposing the actual engineered input to the model.
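To make the idea concrete, here is a heavily simplified, from-scratch sketch of LIME’s core loop for a tabular input. It is not the API of the `lime` Python package, and it omits much of what the real method does (feature discretization, sampling based on training-data statistics, feature selection). The `black_box` function is a hypothetical stand-in for a proprietary model: it computes a GPA-trend feature internally, but the explanation is expressed only in terms of the raw per-term GPAs. The perturbation scale, kernel width, and ridge penalty are arbitrary choices for this example.

```python
import math
import random
from typing import Callable, List, Sequence

def black_box(term_gpas: Sequence[float]) -> float:
    """Hypothetical proprietary model: builds an engineered feature (endpoint
    GPA slope) internally and returns a risk of not graduating."""
    latest = term_gpas[-1]
    trend = (term_gpas[-1] - term_gpas[0]) / (len(term_gpas) - 1)
    z = -2.0 * (latest - 3.0) - 6.0 * trend
    return 1.0 / (1.0 + math.exp(-z))

def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def explain(model: Callable[[Sequence[float]], float], x: Sequence[float],
            n_samples: int = 2000, scale: float = 0.3, kernel_width: float = 0.5,
            ridge: float = 1e-3, seed: int = 0) -> List[float]:
    """LIME-style local explanation: perturb x with Gaussian noise, weight each
    sample by proximity, and fit a weighted ridge regression of the model's
    output on the perturbations. Returns one local weight per raw input."""
    rng = random.Random(seed)
    d = len(x)
    # Accumulate weighted normal equations for [intercept, delta_1..delta_d].
    A = [[0.0] * (d + 1) for _ in range(d + 1)]
    b = [0.0] * (d + 1)
    for _ in range(n_samples):
        delta = [rng.gauss(0.0, scale) for _ in range(d)]
        y = model([xi + di for xi, di in zip(x, delta)])
        w = math.exp(-sum(di * di for di in delta) / kernel_width ** 2)
        z = [1.0] + delta
        for i in range(d + 1):
            b[i] += w * z[i] * y
            for j in range(d + 1):
                A[i][j] += w * z[i] * z[j]
    for i in range(1, d + 1):  # penalize slopes only, not the intercept
        A[i][i] += ridge
    return solve(A, b)[1:]

student = [3.1, 2.8, 2.5]  # raw GPAs for the last three terms
print("black-box risk:", round(black_box(student), 3))
for gpa, weight in zip(student, explain(black_box, student)):
    print(f"GPA {gpa}: local weight {weight:+.3f}")
```

Because this particular black box only looks at the first and last terms, the middle term’s weight comes out near zero, while the earliest and most recent GPAs get weights of opposite sign: the explanation points at the decline from 3.1 to 2.5 without revealing how the trend feature is computed or weighted.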

I haven’t yet had a chance to apply LIME to any systems I’ve worked on, but I’m interested to try it out. If any readers have experience with LIME or related techniques in production in a software-as-a-service setting, I’d love to read your thoughts.

Thanks to Carl Anderson and Brett Goldstein for very helpful comments on a draft of this post!